
#The Need For Ephemeral Containers#

Baking a full-blown Linux userland and debugging tools into production container images makes them inefficient and increases the attack surface.

Make your Slack alerts rock by installing Robusta, Kubernetes monitoring that just works. For example, CrashLoopBackOffs arrive in your Slack with relevant logs, so you don't need to open the terminal and run `kubectl logs`.

#The node was low on resource ephemeral storage free#

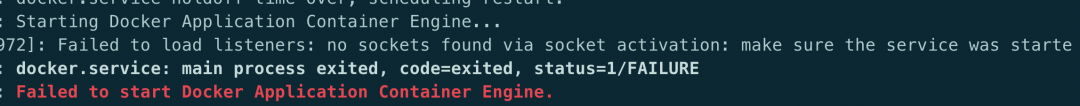

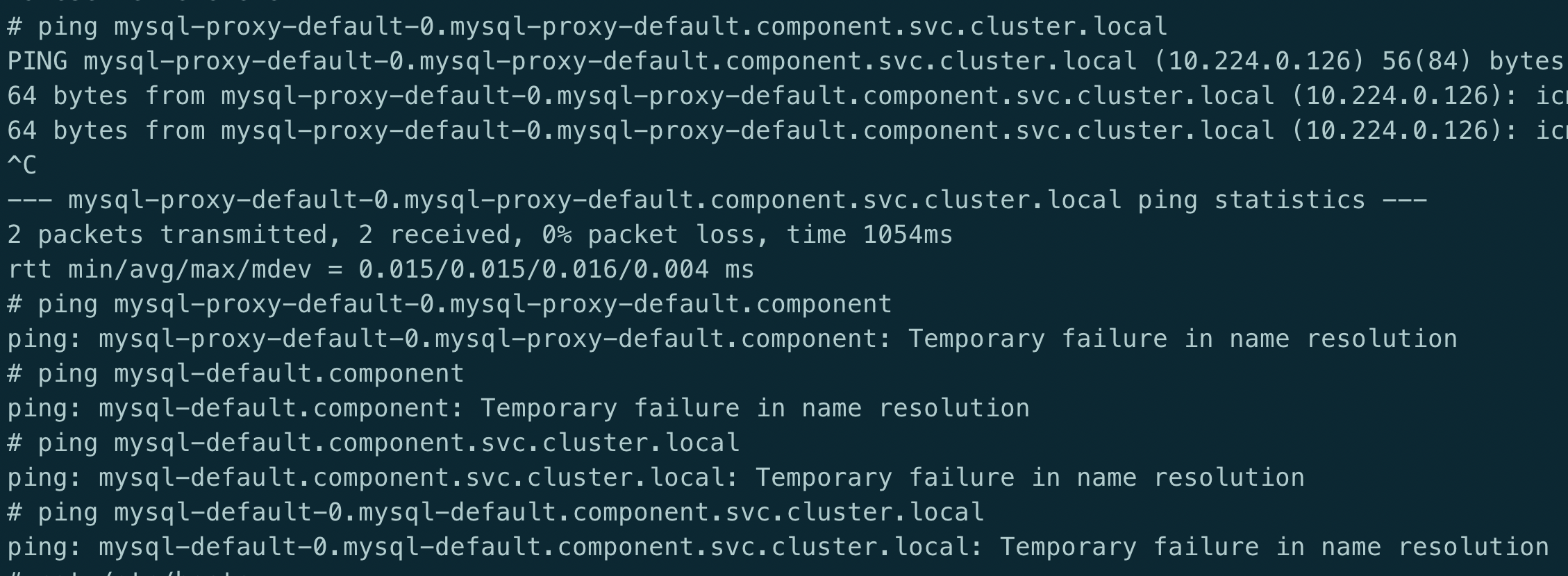

Robusta is based on Prometheus and uses webhooks to add context to each alert.

I had a new experience with Kubernetes and Docker Desktop. According to the Desktop, Kubernetes could not start, and the indicator even turned red to show that it had failed. The node was ready, so I ran `kubectl get all --all-namespaces` and saw that some pods could not start:

| NAMESPACE | NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|---|
| kube-system | pod/coredns-6d4b75cb6d-nhxh4 | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/coredns-6d4b75cb6d-x2q5r | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/etcd-docker-desktop | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/kube-apiserver-docker-desktop | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/kube-controller-manager-docker-desktop | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/kube-proxy-kx498 | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/kube-scheduler-docker-desktop | 1/1 | Running | 2 (6m25s ago) | 51d |
| kube-system | pod/storage-provisioner | 0/1 | Error | 0 | 51d |
| kube-system | pod/vpnkit-controller | 0/1 | ContainerStatusUnknown | 1 (48d ago) | 51d |

Since no container was running in those pods, I couldn't check the container logs, but I could check the description of those pods, including their events: `kubectl describe -n kube-system pod/storage-provisioner`

The end of the events appeared at the bottom of the output:

| Type | Reason | Age | From | Message |
|---|---|---|---|---|
| Normal | Started | 48d | kubelet | Started container storage-provisioner |
| Warning | Evicted | 48d | kubelet | The node was low on resource: ephemeral-storage. |
| Normal | Killing | 48d | kubelet | Stopping container storage-provisioner |

As you can see, this happened a long time ago and there were no other events (only older ones). I could not start these pods, so I deleted them:

- `kubectl delete -n kube-system pod/storage-provisioner`
- `kubectl delete -n kube-system pod/vpnkit-controller`

Nothing like a Deployment existed to recreate them, so I restarted Docker Desktop and Kubernetes was green again.

I remember a time when I had no free space because one vulnerability checker Docker Desktop Extension used about 40 gigabytes, and the last error message indicates that those containers stopped because of the lack of space. I guess other errors could also prevent some pods from starting, so next time you can check whether Kubernetes works but one or two pods can't start; then you can fix those and restart Docker Desktop.

To be honest, I am not sure whether it is Kubernetes that cannot start or only the Desktop that cannot tell me it has already started. Last time I saw it "starting" for minutes, so I went to the terminal and listed the containers. Then I went back to the Desktop and it showed me "Running". I still don't know the reason for this issue, but I don't remember more than one case when I had to reinstall Docker Desktop on my Mac. So I recommend enabling the display of Kubernetes containers and checking them from the terminal. If you see that everything is running and my solution doesn't work for you, you can also delete `~/.kube/config` and try the steps again. It should recreate the config file, but I am not sure about that, so don't start with this. I hope the steps I described above will help you too.
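The manual check described here (list the pods, spot the ones that are not running, delete them) could be sketched as two small shell filters. This is only an illustration, not what I actually ran: the function names `unhealthy_pods` and `delete_commands` are made up for this example, and the column positions assume the default table layout of `kubectl get pods --all-namespaces` with a header line. The second function only prints the delete commands so you can review them first.

```shell
#!/bin/sh
# Hypothetical helpers, not part of kubectl or Docker Desktop.

# Read `kubectl get pods --all-namespaces` output on stdin and print
# "NAMESPACE NAME" for every pod whose STATUS is neither Running nor Completed.
# Assumes the default columns (1=NAMESPACE, 2=NAME, 4=STATUS) and a header
# line, which NR > 1 skips.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1, $2 }'
}

# Turn "NAMESPACE NAME" pairs into delete commands.
# The commands are printed for review, not executed.
delete_commands() {
  awk '{ printf "kubectl delete -n %s pod/%s\n", $1, $2 }'
}

# Usage (needs a reachable cluster):
#   kubectl get pods --all-namespaces | unhealthy_pods | delete_commands
```

Keep in mind that pods like `storage-provisioner` had nothing to recreate them in my case, so after deleting them a restart of Docker Desktop was still needed.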

This is what I do when Kubernetes can't start. The first time, I gave up, and some days later I tried something again and it worked.

#The node was low on resource ephemeral storage install#

It is sometimes claimed that the only way to resolve this is a complete wipe and reinstall. I am sure that is not true, because I have had this problem multiple times recently. In some cases it happens several times a day when I change configurations and install alternative software.
